Testup is a test automation system for web pages and other front ends. But Testup does not only provide a tool to test front ends; it also has a nice web front end of its own. To make sure it stays that way, it is only natural that we use our own software to test itself. Sounds twisted? Well…

Let’s first review some of the common challenges in UI testing.

| Ideal | Reality |
| --- | --- |
| Low redundancy | A generic redesign of the application requires redundant test updates in many places. |
| Full automation | Distinguishing design changes from design failures requires human intervention. |
| Reproducibility | UIs are particularly prone to inconsistencies when interactions occur at superhuman speed and internal states are not yet prepared. |
| Transparent state | The internal state of server components is not accessible after the test has run. |
| Locatability | A failure that surfaces at the end of a complex interaction cannot easily be attributed to a single failed feature or step. |
| Speed | Certain features can only be reached after a lengthy preparation phase, which causes long warm-up periods. |

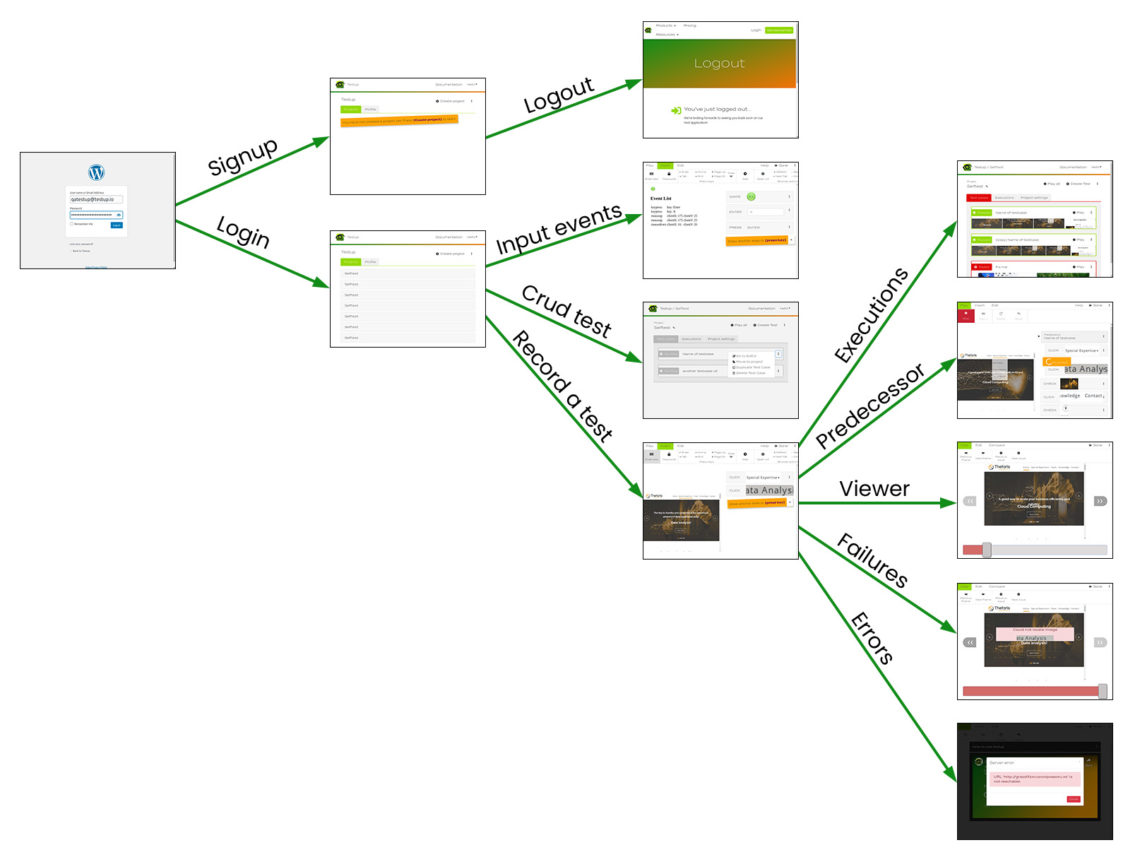

Currently we have 11 UI tests. Each test focuses on a specific aspect of the application under test and exercises several features that belong to that aspect. Some tests share common predecessors that bring the software into a stable state, which then serves as a basis for more advanced features that require a filled database.

The following picture gives you an outline of the relevant screens from each test. Please note that some predecessor tests have multiple continuations. For each of these continuations the entire predecessor must be rerun from scratch to ensure a clean state.
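To make the predecessor idea concrete, here is a minimal sketch of how such a test tree could be modeled. It is not how Testup stores tests internally; the `UiTest` class, the `full_run` helper, and all test and step names are hypothetical and only illustrate that every continuation replays its whole predecessor chain from a clean state.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class UiTest:
    """Hypothetical model of a UI test with an optional predecessor."""
    name: str
    steps: tuple[str, ...]
    predecessor: Optional["UiTest"] = None


def full_run(test: UiTest) -> list[str]:
    """Return every step executed when `test` starts from a clean state."""
    # Walk up to the root to collect the predecessor chain.
    chain = []
    node: Optional[UiTest] = test
    while node is not None:
        chain.append(node)
        node = node.predecessor
    # Replay from the root down, so predecessors always run first.
    steps: list[str] = []
    for node in reversed(chain):
        steps.extend(node.steps)
    return steps


# Illustrative tests only; the names are made up for this sketch.
login = UiTest("login", ("open start page", "enter credentials"))
create_project = UiTest("create project", ("click 'new project'",), predecessor=login)
edit_test = UiTest("edit test", ("open editor",), predecessor=create_project)
share_project = UiTest("share project", ("open sharing dialog",), predecessor=create_project)

for continuation in (edit_test, share_project):
    print(continuation.name, "->", full_run(continuation))
```

Both continuations define their own steps only once, yet each run repeats the login and project creation steps. That is the price of a clean state: the definition is shared, the execution is not.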

How are we doing on our own ideals?

- Low redundancy

We have shared predecessors that are 100% reused. For example, the login process is defined only once and reused everywhere.

Once the tests veer off in different directions from a common predecessor, test steps are no longer shared. The tests must therefore be defined with the least possible overlap of accessed features.

- Full automation

In the good case, tests run fully autonomously from start to end. What if a test breaks? First, all checks operate on the graphical representation of the application, so it is easy for a human to assess the difference between the expected and the observed state. Second, tests will try to recover from the visual change and present the differences to the user, who can then accept the change. In our dreams it would be smart enough to close a cookie banner, but we are not there yet.

- Reproducibility

It is not trivial to define tests that run consistently under variations of uncontrollable variables, e.g. server load, network latency, or expected changes in displayed calendars and times. This is certainly the hardest part, and it relies entirely on the usability of the software to make tracing and fixing issues as much fun as possible. (It cannot be explained until you use the software.)

- Transparent state

Our approach is fully graphical, and as such we might see less of the internal state (e.g. the DOM). However, we do record the entire screen sequence and can thus highlight any early deviation from the baseline. Hence, it is usually possible to navigate quickly to the earliest indication of an incorrect internal state.

- Locatability

If tests were written to just replay recorded screen interactions, it would be difficult to fail exactly at the point where the erroneous feature was executed. Instead, we define tests such that they contain frequent checks and assertions. Adding an assertion is as easy as drawing a rectangle around the area you think should be graphically stable.

- Speed

Let’s face it: UI tests are not unit tests. Our tests currently take some 30 minutes of CPU time, and that number is growing. That’s why our service comes with access to a cluster that can run tests massively in parallel.
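To get a feeling for what parallel execution buys, here is a back-of-the-envelope sketch. Only the test count of 11 and the total of roughly 30 minutes come from this post. The per-test durations are invented for illustration, and the greedy longest-first assignment is a textbook heuristic, not a description of how our cluster actually schedules tests.

```python
import heapq


def wall_clock_minutes(durations, workers):
    """Estimate wall-clock time with a greedy longest-first assignment.

    Each test goes to the worker with the least load so far; the result
    is the load of the busiest worker once all tests are assigned.
    """
    loads = [0.0] * workers
    heapq.heapify(loads)
    for duration in sorted(durations, reverse=True):
        lightest = heapq.heappop(loads)
        heapq.heappush(loads, lightest + duration)
    return max(loads)


# Hypothetical durations in minutes for 11 tests, summing to 30.
durations = [5, 4, 4, 3, 3, 3, 2, 2, 2, 1, 1]

for workers in (1, 4, 11):
    print(workers, "worker(s):", wall_clock_minutes(durations, workers), "minutes")
```

With one worker per test, the longest single test sets the wall-clock time. Beyond that point, more machines no longer help and only shorter tests do.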

To summarize this post with a (biased) view on our own software, I have to say that we are quite happy. We have made progress on most of the goals we set ourselves. In terms of usability, I am convinced we have already surpassed most of the competition. If you haven’t done so yet, please sign up and share your views.

“Testing with a Twist” is a series of articles about testing with Testup. So far, the following articles have been published: